Pelican PiCam, for slimmer high performance cameras.

I guess this counts as part 3 of our look at how to fit high quality cameras into slim phones with a reduced camera bump, or none at all.

This popped up on reddit over the weekend, oddly at around the same time as a comment by Rob Beijendorf that mentioned Pelican’s slimmer modules. Before the DS buyout, Nokia had invested in Pelican Imaging. Harlow is constantly praising the research Nokia’s imaging peeps do on both hardware and computational imaging.

It’s a 16-camera array that provides a lot of depth information. But it’s so much more.

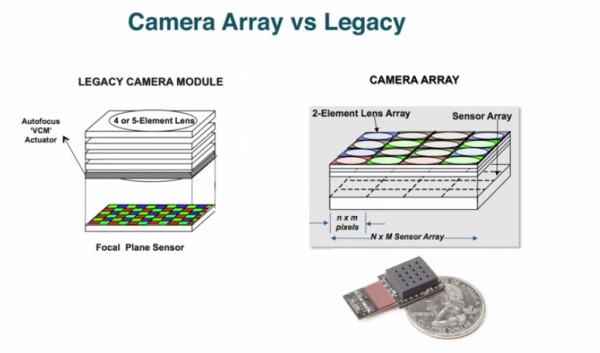

- The traditional lens is divided into 16 lenses, each with a particular aperture.

- Each lens corresponds to a subsensor and underlying sensor array

- Each subsensor is independently controlled

- Individual sensors are optically isolated

- Colour filters are a single colour for each camera, eliminating colour cross talk.

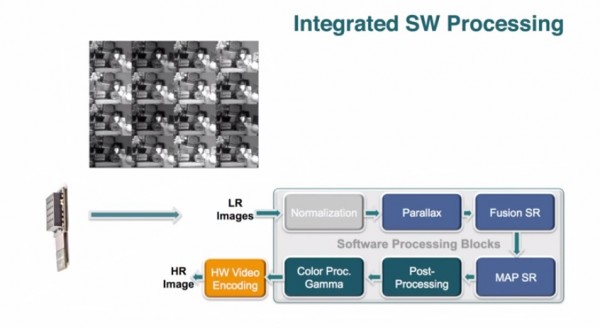

- The raw data is read out and processed to synthesise a high resolution colour image; the same processing also produces a depth map.

Production of a high res image:

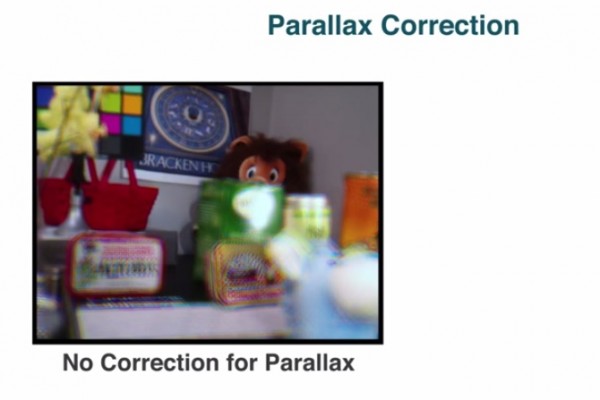

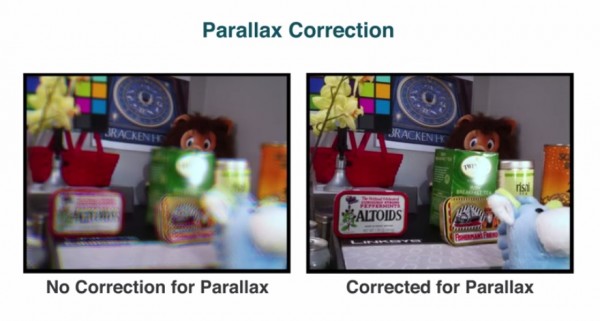

Differences in parallax must be corrected. Each camera has a slightly different viewpoint, and software compensates for that.

Without correction, the result would look blurred, like several slightly offset images superimposed.

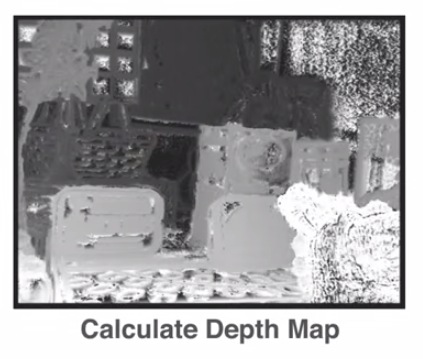

To correct this, they compute a depth map.

Here’s the corrected image side by side with the raw image.
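To get a feel for why depth is needed for parallax correction, here is a minimal sketch using the standard pinhole stereo relation (shift in pixels = focal length in pixels × camera spacing ÷ distance). This is not Pelican’s code; the function names and the numbers in the usage note are made up for illustration, and it only handles a flat scene at a single depth.

```python
def disparity_px(focal_length_px, baseline_m, depth_m):
    """Pixel shift of a point between two cameras spaced baseline_m apart."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * baseline_m / depth_m


def align_row(row, shift):
    """Undo an integer pixel shift on one row of pixels.

    Positions with no source data are marked None.
    """
    width = len(row)
    out = [None] * width
    for x in range(width):
        src = x + shift
        if 0 <= src < width:
            out[x] = row[src]
    return out
```

For example, with an assumed focal length of 1000 px and cameras 2 mm apart, `disparity_px(1000, 0.002, 0.5)` gives a shift of about 4 px for an object half a metre away, and the shift shrinks as the object moves further back. That is why a single global shift cannot line up a real scene and a per-pixel depth map is needed.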

Once the depth information is available, the restoration stage can begin. Pixels from each of the 16 low resolution cameras are fused onto a high resolution grid.

Below, it’s just the green channel.

The above is just a small subsection.

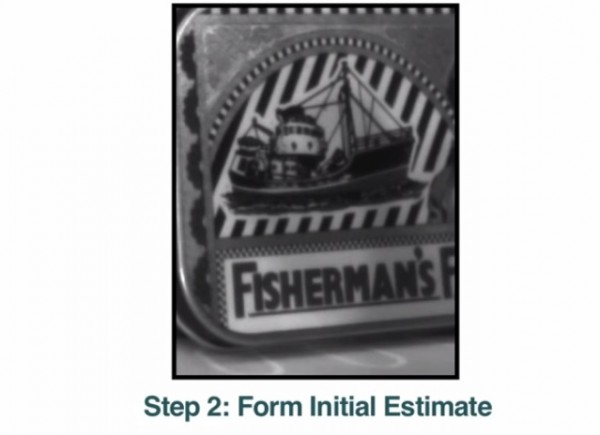
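The fusion idea can be sketched roughly as follows. This is a simple "shift and add" illustration, not Pelican’s actual algorithm: it assumes each low resolution image already has a known integer offset on the fine grid (in reality that would come from the depth and parallax step), and the name `fuse` and its parameters are hypothetical.

```python
def fuse(low_res_images, offsets, scale):
    """Place low-res samples onto a grid `scale` times finer.

    low_res_images: list of 2-D lists of pixel values, all the same size.
    offsets: one (dy, dx) per image, in fine-grid pixels (assumed known).
    Returns the fused grid, with None wherever no sample landed.
    """
    rows = len(low_res_images[0])
    cols = len(low_res_images[0][0])
    height, width = rows * scale, cols * scale
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for image, (dy, dx) in zip(low_res_images, offsets):
        for r in range(rows):
            for c in range(cols):
                y, x = r * scale + dy, c * scale + dx
                if 0 <= y < height and 0 <= x < width:
                    total[y][x] += image[r][c]
                    count[y][x] += 1
    # Average where several samples overlap; leave holes as None.
    return [
        [total[y][x] / count[y][x] if count[y][x] else None for x in range(width)]
        for y in range(height)
    ]
```

Two tiny 1×2 images with offsets `(0, 0)` and `(0, 1)` at `scale=2` interleave into one full row of four pixels, while the second row of the fine grid stays empty. Those empty cells are the holes.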

Once fused onto a common grid, the remaining holes are filled.

These are the ‘filled in holes’

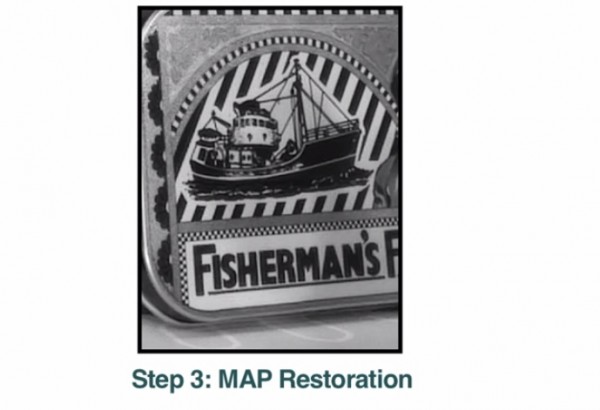

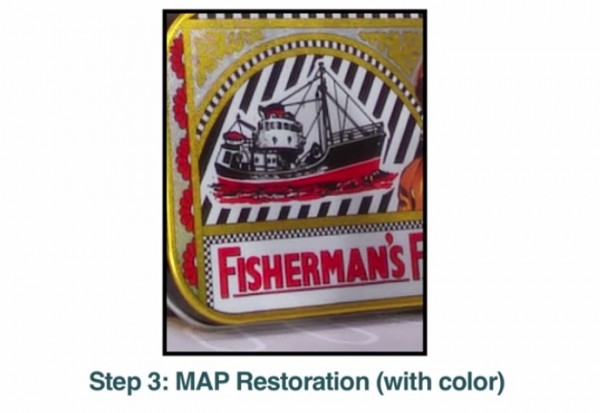
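As a rough illustration of hole filling, the sketch below replaces each empty cell with the average of its valid up/down/left/right neighbours. This is a deliberately naive stand-in, not the method Pelican uses, and `fill_holes` is a name invented for the example.

```python
def fill_holes(grid):
    """Replace each None with the mean of its valid 4-neighbours, if any."""
    height, width = len(grid), len(grid[0])
    filled = [row[:] for row in grid]
    for y in range(height):
        for x in range(width):
            if grid[y][x] is None:
                neighbours = [
                    grid[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < height and 0 <= nx < width
                    and grid[ny][nx] is not None
                ]
                # A hole with no valid neighbours stays a hole.
                if neighbours:
                    filled[y][x] = sum(neighbours) / len(neighbours)
    return filled
```

Neighbours are read from the original grid rather than the partly filled one, so the result does not depend on the order the holes are visited in.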

The filled-in image is further refined with the MAP (maximum a posteriori) restoration algorithm to form an optimal reconstruction of the original scene.

Finally, the colour is restored.

Things we’ve seen before?

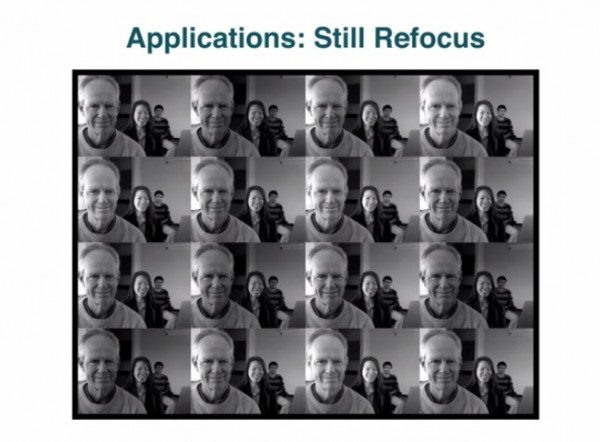

- With 16 cameras, PiCam captures depth information along with the picture, so you can focus afterwards. Instead of taking multiple photos at different focus points, the way Nokia Camera does, the 16 cameras can all take individual focus points, correct the image and produce a picture that you can refocus afterwards.

- Everything is in focus but you can also create synthetic focus effects.
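A synthetic focus effect on an all-in-focus image can be sketched like this: blur each pixel more the further its depth is from the chosen focus depth. This is only a toy on a single row of pixels with a box blur, assuming a per-pixel depth value is available; `refocus_row` and `strength` are made-up names, and nothing here is taken from PiCam itself.

```python
def refocus_row(pixels, depths, focus_depth, strength=1.0):
    """Blur each pixel with a box filter whose radius grows with the
    distance between that pixel's depth and focus_depth."""
    width = len(pixels)
    out = []
    for x in range(width):
        radius = int(round(strength * abs(depths[x] - focus_depth)))
        lo, hi = max(0, x - radius), min(width, x + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out
```

Pixels whose depth matches `focus_depth` get a radius of 0 and stay sharp; everything else is smeared. Because the depth map is stored with the image, you can call it again with a different `focus_depth` whenever you like, which is the "focus afterwards" trick.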

PiCam can refocus video as well.

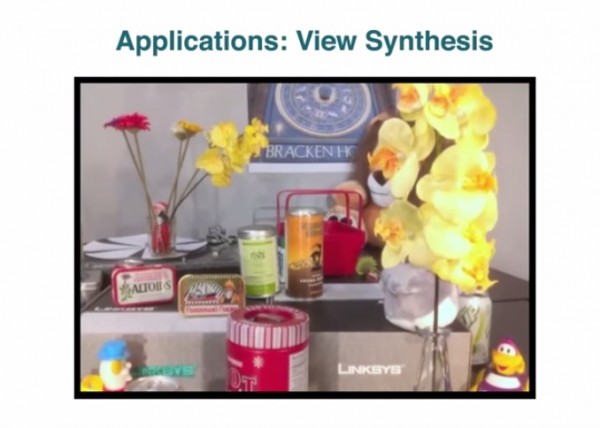

There are virtual viewpoints too: a sort of 3D view where you can pan up/down/left/right and see around objects.

The motion parallax gives a sense of depth. No special glasses or screen necessary.
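The virtual viewpoint effect can be approximated with the same depth information: move each pixel sideways by an amount inversely proportional to its depth, so near objects slide further than the background. The sketch below does that for one row of pixels. It is an assumption-laden toy (integer shifts, a hypothetical `render_view_row` function, no filling of the gaps that open up), not a description of how PiCam renders its views.

```python
def render_view_row(pixels, depths, camera_shift, focal_length_px=1.0):
    """Forward-warp one row to a camera moved sideways by camera_shift.

    Nearer pixels move further. If two pixels land on the same spot,
    the nearer one wins. Spots nothing lands on are left as None.
    """
    width = len(pixels)
    out = [None] * width
    nearest = [float("inf")] * width
    for x in range(width):
        shift = int(round(focal_length_px * camera_shift / depths[x]))
        target = x - shift
        if 0 <= target < width and depths[x] < nearest[target]:
            out[target] = pixels[x]
            nearest[target] = depths[x]
    return out
```

With a near object in front of a far background, the near pixel slides over its neighbour and leaves a `None` gap where it used to be. That gap is the part of the background you are now "seeing around" the object, and a real renderer would fill it from the other cameras in the array.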

The depth information and image can also be used to create 3D objects/improved augmented reality.

Concerns?

- What will the actual performance be like?

- What about low light?

- What about action shots?

- How much detail can we get?

- What about OIS? (Some comments on the YouTube video suggested further computational IS.)
